Development¶

Here are some pointers for hacking on bezier.

Adding Features¶

In order to add a feature to bezier:

- Discuss: File an issue to notify maintainer(s) of the proposed changes (i.e. just sending a large PR with a finished feature may catch maintainer(s) off guard).

- Add tests: The feature must work fully on the following CPython versions: 2.7, 3.5 and 3.6 on both UNIX and Windows. In addition, the feature should have 100% line coverage.

- Documentation: The feature must (should) be documented with helpful doctest examples wherever relevant.

Running Unit Tests¶

We recommend using nox (nox-automation) to run unit tests:

$ nox -s "unit_tests(python_version='2.7')"

$ nox -s "unit_tests(python_version='3.5')"

$ nox -s "unit_tests(python_version='3.6')"

$ nox -s "unit_tests(python_version='pypy')"

$ nox -s unit_tests # Run all versions

However, pytest can be used directly (though it won’t manage dependencies or build extensions):

$ PYTHONPATH=src/ python2.7 -m pytest tests/

$ PYTHONPATH=src/ python3.5 -m pytest tests/

$ PYTHONPATH=src/ python3.6 -m pytest tests/

$ PYTHONPATH=src/ MATPLOTLIBRC=test/ pypy -m pytest tests/

Native Code Extensions¶

Many low-level computations have alternate implementations in Fortran.

When using nox, the bezier package will automatically be installed

into a virtual environment and the native extensions will be built during

install.

However, if you run the tests directly from the source tree via

$ PYTHONPATH=src/ python -m pytest tests/

some unit tests may be skipped. The unit tests for the Fortran implementations will skip (rather than fail) if the extensions aren’t compiled and present in the source tree. To compile the native extensions, make sure you have a valid Fortran compiler and run

$ python setup.py build_ext --inplace

$ # OR

$ python setup.py build_ext --inplace --fcompiler=${FC}

To verify that the correct compiler commands are invoked, set the BEZIER_JOURNAL environment variable to a filename; the commands invoked will then be written to that file. The nox session check_journal uses this journaling option to verify the commands used to compile the extension on CircleCI.
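The journaling idea can be sketched in a few lines. This is only an illustration under stated assumptions, not the code bezier uses during a build: the helper name run_and_journal is hypothetical, and only the BEZIER_JOURNAL variable name is taken from the text above.

```python
import os
import shlex
import subprocess
import sys


def run_and_journal(command, environ=None):
    """Run ``command`` and, if requested, record it in a journal file.

    ``run_and_journal`` is a hypothetical helper for illustration; only the
    ``BEZIER_JOURNAL`` variable name comes from the documentation.
    """
    environ = os.environ if environ is None else environ
    journal = environ.get("BEZIER_JOURNAL")
    if journal is not None:
        # Append one shell-quoted command per line.
        line = " ".join(shlex.quote(arg) for arg in command)
        with open(journal, "a") as file_obj:
            file_obj.write(line + "\n")
    # Return the exit status of the command that was run.
    return subprocess.call(command)


if __name__ == "__main__":
    # Journals the command only if BEZIER_JOURNAL is set in the environment.
    run_and_journal([sys.executable, "-c", "pass"])
```

Comparing the journal file against a list of expected commands is then a plain text comparison, which is roughly what a check like check_journal needs.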

Test Coverage¶

bezier has 100% line coverage. The coverage is checked

on every build and uploaded to coveralls.io via the

COVERALLS_REPO_TOKEN environment variable set in

the CircleCI environment.

To run the coverage report locally:

$ nox -s cover

$ # OR

$ PYTHONPATH=src/:functional_tests/ python -m pytest \

> --cov=bezier \

> --cov=tests \

> tests/ \

> functional_tests/test_segment_box.py

Slow Tests¶

To run unit tests without tests that have been (explicitly)

marked slow, use the --ignore-slow flag:

$ nox -s "unit_tests(python_version='2.7')" -- --ignore-slow

$ nox -s "unit_tests(python_version='3.5')" -- --ignore-slow

$ nox -s "unit_tests(python_version='3.6')" -- --ignore-slow

$ nox -s unit_tests -- --ignore-slow

These slow tests have been identified via:

$ ...

$ nox -s "unit_tests(python_version='3.6')" -- --durations=10

and then marked with pytest.mark.skipif.
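The underlying mechanism is a conditional skip. As a rough standard-library analogue (this sketch uses unittest.skipIf rather than pytest.mark.skipif, and the IGNORE_SLOW environment variable and test names are hypothetical stand-ins for the --ignore-slow flag):

```python
import os
import unittest

# Hypothetical switch standing in for the ``--ignore-slow`` flag.
IGNORE_SLOW = os.environ.get("IGNORE_SLOW") == "1"

# Reusable decorator: skips the test only when slow tests are ignored.
slow = unittest.skipIf(IGNORE_SLOW, "slow test ignored")


class TestExample(unittest.TestCase):
    def test_fast(self):
        self.assertEqual(1 + 1, 2)

    @slow
    def test_slow(self):
        # Stand-in for an expensive computation.
        self.assertEqual(sum(range(10 ** 6)), 499999500000)
```

The condition is evaluated once, when the decorator is created, so a skipped test is reported as skipped rather than silently dropped.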

Slow Install¶

Installing NumPy with PyPy can take upwards of two minutes, which makes it prohibitive to create a new environment for testing.

In order to avoid this penalty, the WHEELHOUSE environment

variable can be used to instruct nox to install NumPy from

locally built wheels when installing the pypy sessions.

To pre-build a NumPy wheel:

$ pypy -m pip wheel --wheel-dir=${WHEELHOUSE} numpy

The Docker image for the CircleCI test environment has already

pre-built this wheel and stored it in the /wheelhouse directory.

So, in the CircleCI environment, the WHEELHOUSE environment

variable is set to /wheelhouse.
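One way such a switch could work is sketched below. The helper numpy_install_args is hypothetical and is not taken from the bezier nox config; it only shows how a WHEELHOUSE directory could redirect pip to locally built wheels via the --no-index and --find-links options.

```python
import os


def numpy_install_args(environ=None):
    """Build ``pip install`` arguments for NumPy.

    Hypothetical helper for illustration only.
    """
    environ = os.environ if environ is None else environ
    wheelhouse = environ.get("WHEELHOUSE")
    if wheelhouse is None:
        # No local wheels: let pip resolve NumPy from the package index.
        return ["pip", "install", "numpy"]
    # Use only the wheels found in the local directory.
    return [
        "pip", "install", "--no-index", "--find-links", wheelhouse, "numpy",
    ]
```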

Functional Tests¶

Line coverage and unit tests are not entirely sufficient to

test numerical software. As a result, there is a fairly

large collection of functional tests for bezier.

These give a broad sampling of curve-curve intersection, surface-surface intersection and segment-box intersection problems to check both the accuracy (i.e. detecting all intersections) and the precision of the detected intersections.
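The two checks can be sketched as follows. This is a simplified illustration, not the code in the functional tests: the helper check_intersections and its tolerance are assumptions, and points are plain (x, y) tuples.

```python
import math


def check_intersections(computed, expected, tol=1e-12):
    """Compare computed intersection points against known ones.

    Hypothetical helper; the tolerance is an arbitrary illustrative choice.
    """
    # Accuracy: every expected intersection is detected, with no extras.
    if len(computed) != len(expected):
        return False
    # Precision: each detected point is close to its expected value.
    # Sorting pairs the points up; this assumes they are well separated.
    for (x1, y1), (x2, y2) in zip(sorted(computed), sorted(expected)):
        close_x = math.isclose(x1, x2, rel_tol=tol, abs_tol=tol)
        close_y = math.isclose(y1, y2, rel_tol=tol, abs_tol=tol)
        if not (close_x and close_y):
            return False
    return True
```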

To run the functional tests:

$ nox -s "functional(python_version='2.7')"

$ nox -s "functional(python_version='3.5')"

$ nox -s "functional(python_version='3.6')"

$ nox -s "functional(python_version='pypy')"

$ nox -s functional # Run all versions

$ # OR

$ export PYTHONPATH=src/:functional_tests/

$ python2.7 -m pytest functional_tests/

$ python3.5 -m pytest functional_tests/

$ python3.6 -m pytest functional_tests/

$ MATPLOTLIBRC=test/ pypy -m pytest functional_tests/

$ unset PYTHONPATH

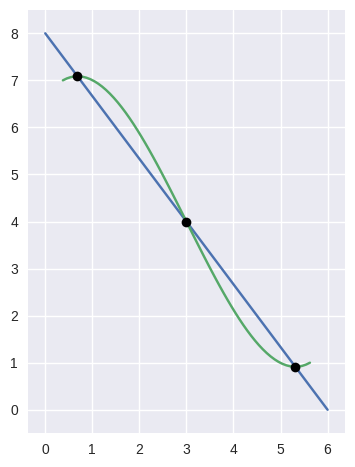

For example, the following curve-curve intersection is a functional test case:

and there is a Curve-Curve Intersection document which captures many of the cases in the functional tests.

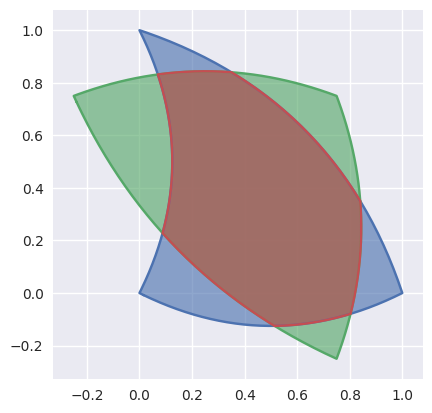

A surface-surface intersection functional test case:

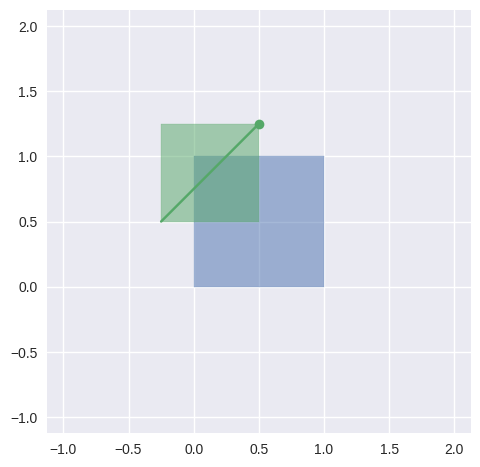

a segment-box functional test case:

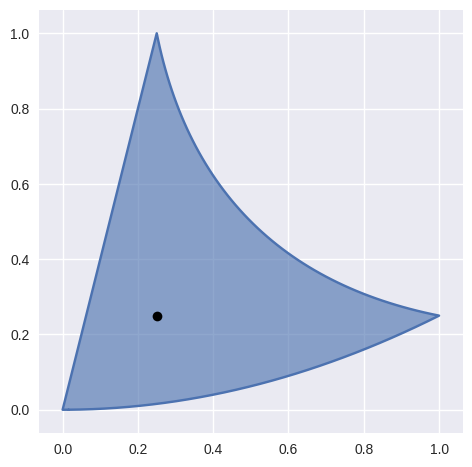

and a “locate point on surface” functional test case:

Functional Test Data¶

The curve-curve and surface-surface intersection test cases are stored in JSON files.

This way, the test cases are programming language agnostic and can be

repurposed. The JSON schemas for these files are stored in the

functional_tests/schema directory.
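Because the data is plain JSON, any language with a JSON parser can consume it. The sketch below uses a made-up layout: the field names id and control_points and the helper load_curves are assumptions for illustration, and the real layout is whatever the schema files define.

```python
import json

# Hypothetical JSON layout, invented for this example; the real layout is
# defined by the schema files in ``functional_tests/schema``.
RAW = """
[
  {"id": "1", "control_points": [[0.0, 0.0], [0.5, 1.0], [1.0, 0.0]]},
  {"id": "2", "control_points": [[0.0, 0.0], [1.0, 1.0]]}
]
"""


def load_curves(text):
    """Map each curve ID to its degree and control points."""
    curves = {}
    for entry in json.loads(text):
        points = [tuple(point) for point in entry["control_points"]]
        # A Bezier curve with n + 1 control points has degree n.
        curves[entry["id"]] = {
            "degree": len(points) - 1,
            "control_points": points,
        }
    return curves
```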

Coding Style¶

Code is PEP8 compliant and this is enforced with flake8 and pylint.

To check compliance:

$ nox -s lint

A few extensions and overrides have been specified in the pylintrc

configuration for bezier.

Docstring Style¶

We require docstrings on all public objects and enforce this with

our lint checks. The docstrings mostly follow PEP257

and are written in the Google style, e.g.

Args:
    path (str): The path of the file to wrap
    field_storage (FileStorage): The :class:`FileStorage` instance to wrap
    temporary (bool): Whether or not to delete the file when the File
        instance is destructed

Returns:
    BufferedFileStorage: A buffered writable file descriptor

In order to support these in Sphinx, we use the Napoleon extension. In addition, the sphinx-docstring-typing Sphinx extension is used to allow type annotations (introduced in Python 3.5) for arguments and results.
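As a small illustration of the style on a complete function (lerp is a made-up example, not part of the bezier API):

```python
def lerp(start, end, fraction):
    """Linearly interpolate between two values.

    Args:
        start (float): The value returned when ``fraction`` is 0.
        end (float): The value returned when ``fraction`` is 1.
        fraction (float): The interpolation parameter.

    Returns:
        float: The interpolated value.
    """
    return (1.0 - fraction) * start + fraction * end
```

Each argument gets its type in parentheses after the name, and the return type leads the description under Returns.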

Documentation¶

The documentation is built with Sphinx and automatically

updated on RTD every time a commit is pushed to master.

To build the documentation locally:

$ nox -s docs

$ # OR (from a Python 3.5 or later environment)

$ PYTHONPATH=src/ ./scripts/build_docs.sh

Documentation Snippets¶

A large effort is made to provide useful snippets in documentation. To make sure these snippets are valid (and remain valid over time), doctest is used to check that the interpreter output in the snippets is valid.

To run the documentation tests:

$ nox -s doctest

$ # OR (from a Python 3.5 or later environment)

$ PYTHONPATH=src/ NO_IMAGES=True sphinx-build -W \

> -b doctest \

> -d docs/build/doctrees \

> docs \

> docs/build/doctest
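To show what the doctest builder checks, the sketch below runs the standard library doctest module directly on a snippet. The names SNIPPET and check_snippet are hypothetical; Sphinx drives the same kind of comparison for the snippets embedded in the documentation.

```python
import doctest

# A snippet in interpreter format, as it might appear in the documentation.
SNIPPET = """
>>> 1.5 + 2.25
3.75
>>> sorted({3, 1, 2})
[1, 2, 3]
"""


def check_snippet(text):
    """Run the interpreter examples in ``text``.

    Returns a ``(failed, attempted)`` pair.
    """
    parser = doctest.DocTestParser()
    test = parser.get_doctest(text, {}, "snippet", None, 0)
    runner = doctest.DocTestRunner(verbose=False)
    # Discard the failure report; only the counts are of interest here.
    results = runner.run(test, out=lambda message: None)
    return results.failed, results.attempted
```

A snippet whose recorded output no longer matches what the interpreter prints shows up as a failure, which is how stale examples are caught.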

Documentation Images¶

Many images are included to illustrate the curves / surfaces / etc. under consideration and to display the result of the operation being described. To keep these images up-to-date with the doctest snippets, the images are created as doctest cleanup.

In addition, the images in the Curve-Curve Intersection document and this document are generated as part of the functional tests.

To regenerate all the images:

$ nox -s docs_images

$ # OR (from a Python 3.5 or later environment)

$ export MATPLOTLIBRC=docs/ PYTHONPATH=src/

$ sphinx-build -W \

> -b doctest \

> -d docs/build/doctrees \

> docs \

> docs/build/doctest

$ python functional_tests/test_segment_box.py --save-plot

$ python functional_tests/test_surface_locate.py --save-plot

$ python functional_tests/make_curve_curve_images.py

$ python functional_tests/make_surface_surface_images.py

$ unset MATPLOTLIBRC PYTHONPATH

Continuous Integration¶

Tests are run on CircleCI and AppVeyor after every commit. To see which tests are run, see the CircleCI config and the AppVeyor config.

On CircleCI, a Docker image is used to provide fine-grained control over

the environment. There is a base python-multi Dockerfile that just has the

Python versions we test in. The image used in our CircleCI builds (from

bezier Dockerfile) installs dependencies needed for testing (such as

nox and NumPy).

Deploying New Versions¶

New versions are pushed to PyPI manually after a git tag is

created. The process is manual (rather than automated) for several

reasons:

- The documentation and README (which acts as the landing page text on PyPI) will be updated with links scoped to the versioned tag (rather than master).

- Several badges on the documentation landing page (index.rst) are irrelevant to a fixed version (such as the “latest” version of the package).

- The build badges in the README and the documentation will be changed to point to a fixed (and passing) build that has already completed (will be the build that occurred when the tag was pushed). If the builds pushed to PyPI automatically, a build would need to link to itself while being run.

- Wheels need to be built for Linux, Windows and OS X. This process is becoming better, but is still scattered across many different build systems. Each wheel will be pushed directly to PyPI via twine.

- The release will be manually pushed to TestPyPI so the landing page can be visually inspected and the package can be installed from TestPyPI rather than from a local file.

Supported Python Versions¶

bezier explicitly supports a fixed set of Python versions.

Supported versions can be found in the nox.py config.

Versioning¶

bezier follows semantic versioning.

It is currently in major version zero (0.y.z), which means that

anything may change at any time and the public API should not be

considered stable.